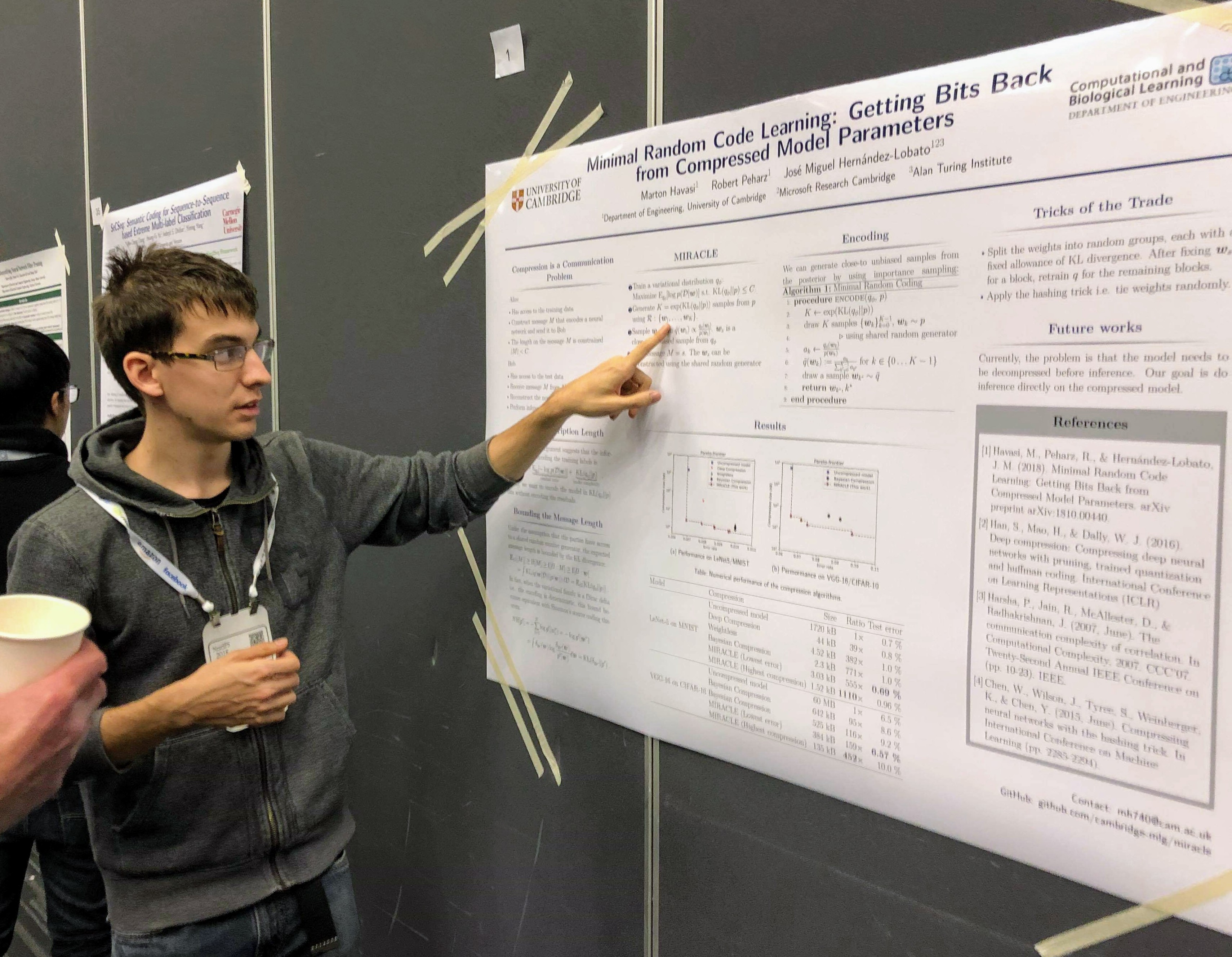

Marton Havasi

I am currently a perception systems engineer working on object detection (lidar and computer vision) for autonomous vehicles. My research background is in neural compression, probabilistic methods, reliable deep learning, and interpretability.

Quick links: Google Scholar, LinkedIn